Discriminative model

| Machine learning and data mining |

|---|

|

|

Machine-learning venues |

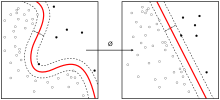

Discriminative models, also referred to as conditional models, are a class of models used in statistical classification, especially in supervised machine learning. A discriminative classifier learns to perform the classification by relying only on the observed data. The approaches used in supervised learning can be categorized into discriminative models and generative models. Compared with generative models, discriminative models make fewer assumptions about the distributions but depend heavily on the quality of the data. For example, given a set of labeled pictures of dogs and rabbits, a discriminative model will match a new, unlabeled picture to the most similar labeled picture and then output that picture's class label, dog or rabbit. A generative model, by contrast, builds a model from assumptions it makes about each class, such as that all rabbits have red eyes, and uses that model to assign a class label to the unlabeled picture. Typical discriminative learning approaches include logistic regression (LR), support vector machines (SVMs), conditional random fields (CRFs) (specified over an undirected graph), and others. Typical generative model approaches include naive Bayes, Gaussian mixture models, and others.


Definition

Unlike generative modelling, which studies the joint probability $P(x,y)$, discriminative modeling studies the conditional probability $P(y\mid x)$, or directly maps the observed variables $x$ (e.g. a training sample) to a class label $y$ (the unobserved target). In object recognition, for example, $x$ is likely to be a vector (raw pixels, features extracted from the image, and so on). Within a probabilistic framework, this is done by modeling the conditional probability distribution $P(y\mid x)$, which can be used for predicting $y$ from $x$. Note that there is still a distinction between the conditional model and the discriminative model, though more often they are simply categorized together as discriminative models.
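As a minimal sketch of "modeling $P(y\mid x)$ and using it to predict $y$ from $x$", the Python below estimates the conditional distribution for a single discrete feature by counting, then predicts the label with the highest conditional probability. The feature values, labels, and the helper names `fit_conditional` and `predict` are hypothetical; practical discriminative models replace the counting step with a parametric model such as logistic regression. Note that the sketch never estimates $P(x)$ or $P(x,y)$.

```python
from collections import Counter, defaultdict


def fit_conditional(samples):
    """Estimate P(y | x) for a discrete x by relative label frequencies."""
    counts = defaultdict(Counter)
    for x, y in samples:
        counts[x][y] += 1
    model = {}
    for x, label_counts in counts.items():
        total = sum(label_counts.values())
        model[x] = {y: c / total for y, c in label_counts.items()}
    return model


def predict(model, x):
    """Return the label y that maximizes the estimated P(y | x)."""
    dist = model[x]
    return max(dist, key=dist.get)


# Hypothetical toy data: x is one discrete feature, y is the class label.
samples = [("long_ears", "rabbit"), ("long_ears", "rabbit"), ("long_ears", "dog"),
           ("short_ears", "dog"), ("short_ears", "dog")]
model = fit_conditional(samples)
print(predict(model, "long_ears"))  # rabbit
```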

Pure Discriminative Model vs. Conditional Model

A conditional model models the conditional probability distribution $P(y\mid x)$, while a traditional (pure) discriminative model aims to optimize a mapping of the input to the most similar training samples.[1]
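The "pure" discriminative view can be illustrated with a nearest-neighbour rule, which maps an input to the label of the most similar training sample without producing any probability. This is a sketch under stated assumptions: the two-dimensional feature vectors are hypothetical, and using Euclidean distance as the similarity measure is an illustrative choice, not one taken from the cited source.

```python
import math


def nearest_neighbor_label(train, x):
    """Return the label of the training sample closest to x (Euclidean distance)."""
    best_label, best_dist = None, math.inf
    for features, label in train:
        d = math.dist(features, x)  # requires Python 3.8+
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label


# Hypothetical 2-D feature vectors for labeled pictures.
train = [((0.9, 0.2), "rabbit"), ((0.8, 0.3), "rabbit"),
         ((0.2, 0.9), "dog"), ((0.3, 0.7), "dog")]
print(nearest_neighbor_label(train, (0.85, 0.25)))  # rabbit
```

Unlike a conditional model, this rule gives no measure of how confident the assignment is; it only returns the label of the closest stored example.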

Typical Discriminative Modelling Approaches[2]

The following approaches assume that we are given a training data set $D=\{(x_i,y_i)\mid i=1,\dots,N\}$, where $y_i$ is the output corresponding to the input $x_i$.

Linear Classifier

With the linear classifier method, we use a function $f(x)$ to reproduce the behavior observed in the training data set. Using the joint feature vector $\phi(x,y)$, the decision function is defined as:

$$f(x;w)=\arg\max_{y}\, w^T\phi(x,y)$$

According to Memisevic's interpretation,[2] $w^T\phi(x,y)$, which is also written $c(x,y;w)$, computes a score that measures the compatibility of the input $x$ with the potential output $y$. The $\arg\max$ then selects the class with the highest score.
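A minimal Python sketch of this decision rule follows. It assumes one common construction of the joint feature vector, in which $\phi(x,y)$ copies $x$ into a block reserved for class $y$ and fills the other blocks with zeros; with this choice $w^T\phi(x,y)$ reduces to a per-class linear score. The weights and the helper names are hypothetical.

```python
def joint_features(x, y, num_classes):
    """phi(x, y): copy x into the block belonging to class y, zeros elsewhere."""
    d = len(x)
    phi = [0.0] * (d * num_classes)
    phi[y * d:(y + 1) * d] = x
    return phi


def score(w, x, y, num_classes):
    """c(x, y; w) = w^T phi(x, y)."""
    return sum(wi * pi for wi, pi in zip(w, joint_features(x, y, num_classes)))


def decide(w, x, num_classes):
    """f(x; w) = argmax_y w^T phi(x, y)."""
    return max(range(num_classes), key=lambda y: score(w, x, y, num_classes))


# Hypothetical weights for 2 features and 3 classes (one weight block per class).
w = [1.0, 0.0,   # class 0 favours feature 0
     0.0, 1.0,   # class 1 favours feature 1
     0.5, 0.5]   # class 2 weights both features equally
print(decide(w, [2.0, 0.5], 3))  # 0
```

Other choices of $\phi$ (for example, for structured outputs) would only change `joint_features`; the scoring and $\arg\max$ steps stay the same.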

Logistic Regression (LR)

The 0-1 loss function is commonly used in decision theory. For the logistic regression model, the conditional probability distribution $P(y\mid x;w)$, where $w$ is a parameter vector fitted to the training data, can be written as:

$$P(y\mid x;w)=\frac{1}{Z(x;w)}\exp\left(w^T\phi(x,y)\right),\quad\text{with}\quad Z(x;w)=\sum_{y}\exp\left(w^T\phi(x,y)\right)$$

The equation above defines logistic regression. Notice that a major distinction between models is the way they introduce the posterior probability. Here, the posterior probability is inferred from the parametric model. We can then fit the parameter $w$ by maximizing the log-likelihood:

$$L(w)=\sum_{i}\log p(y_i\mid x_i;w)$$

Equivalently, one can minimize the log-loss:

$$l^{\log}(x_i,y_i,c(x_i;w))=-\log p(y_i\mid x_i;w)=\log Z(x_i;w)-w^T\phi(x_i,y_i)$$
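The sketch below computes $P(y\mid x;w)$, the partition function $Z(x;w)$ and the log-loss for linear scores, and checks that the two forms of the log-loss agree. It assumes the same block construction of $\phi(x,y)$ as a simple choice of joint feature vector; the weights, the input and the helper names are hypothetical. $\log Z$ is computed with the usual max-subtraction trick so that `math.exp` does not overflow for large scores.

```python
import math


def joint_features(x, y, k):
    """Assumed block construction of phi(x, y) for k classes."""
    d = len(x)
    phi = [0.0] * (d * k)
    phi[y * d:(y + 1) * d] = x
    return phi


def score(w, x, y, k):
    """w^T phi(x, y)."""
    return sum(a * b for a, b in zip(w, joint_features(x, y, k)))


def log_partition(w, x, k):
    """log Z(x; w) = log sum_y exp(w^T phi(x, y)), computed stably."""
    scores = [score(w, x, y, k) for y in range(k)]
    m = max(scores)
    return m + math.log(sum(math.exp(s - m) for s in scores))


def prob(w, x, y, k):
    """P(y | x; w) = exp(w^T phi(x, y)) / Z(x; w)."""
    return math.exp(score(w, x, y, k) - log_partition(w, x, k))


def log_loss(w, x, y, k):
    """-log P(y | x; w) written as log Z(x; w) - w^T phi(x, y)."""
    return log_partition(w, x, k) - score(w, x, y, k)


# Hypothetical weights (2 features, 3 classes) and input.
w = [1.0, 0.0, 0.0, 1.0, 0.5, 0.5]
x = [2.0, 0.5]
probs = [prob(w, x, y, 3) for y in range(3)]
print(round(sum(probs), 6))                                     # 1.0
print(abs(log_loss(w, x, 0, 3) + math.log(probs[0])) < 1e-12)  # True
```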

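Since the log-loss is differentiable, a gradient-based method can be used to optimize the model. A global optimum is guaranteed because the objective function is convex. The gradient of the log-likelihood is:

$$\frac{\partial L(w)}{\partial w}=\sum_{i}\left(\phi(x_i,y_i)-\mathbb{E}_{p(y\mid x_i;w)}\left[\phi(x_i,y)\right]\right)$$

where $\mathbb{E}_{p(y\mid x_i;w)}[\cdot]$ denotes the expectation with respect to $p(y\mid x_i;w)$.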

This method is computationally efficient when the number of classes is relatively small.
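To make the gradient formula concrete, the following sketch implements $\partial L/\partial w$ as the observed joint feature vector minus its expectation under the model, and uses it for plain gradient ascent on $L(w)$. The toy data, learning rate and number of steps are hypothetical, the block construction of $\phi(x,y)$ is an assumption, and fixed-step gradient ascent is only one of many gradient-based optimizers that could be used. The expectation requires a sum over all classes, which is why the cost grows with the number of classes.

```python
import math


def joint_features(x, y, k):
    """Assumed block construction of phi(x, y) for k classes."""
    d = len(x)
    phi = [0.0] * (d * k)
    phi[y * d:(y + 1) * d] = x
    return phi


def probs(w, x, k):
    """P(y | x; w) for every class y: a softmax over the scores w^T phi(x, y)."""
    scores = [sum(a * b for a, b in zip(w, joint_features(x, y, k))) for y in range(k)]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def log_likelihood(w, data, k):
    """L(w) = sum_i log P(y_i | x_i; w)."""
    return sum(math.log(probs(w, x, k)[y]) for x, y in data)


def gradient(w, data, k):
    """dL/dw = sum_i [phi(x_i, y_i) - E_{P(y | x_i; w)} phi(x_i, y)]."""
    g = [0.0] * len(w)
    for x, y in data:
        p = probs(w, x, k)
        expected = [0.0] * len(w)
        for c in range(k):
            for j, f in enumerate(joint_features(x, c, k)):
                expected[j] += p[c] * f
        for j, f in enumerate(joint_features(x, y, k)):
            g[j] += f - expected[j]
    return g


def train(data, k, d, lr=0.1, steps=200):
    """Fixed-step gradient ascent on L(w), starting from w = 0."""
    w = [0.0] * (d * k)
    for _ in range(steps):
        g = gradient(w, data, k)
        w = [wi + lr * gi for wi, gi in zip(w, g)]
    return w


# Hypothetical toy data: 2 features, 2 classes.
data = [([1.0, 0.0], 0), ([0.9, 0.1], 0), ([0.0, 1.0], 1), ([0.2, 0.8], 1)]
w = train(data, k=2, d=2)
print(log_likelihood(w, data, 2) > log_likelihood([0.0] * 4, data, 2))  # True
```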

Support Vector Machine

Another continuous (but not everywhere differentiable) alternative to the 0/1-loss is the 'hinge-loss', which can be defined as:

$$l^{\text{hinge}}(x_i,y_i,c(x_i;w))=\max_{y}\left(\mathbb{1}[y\neq y_i]+c(x_i,y;w)\right)-c(x_i,y_i;w)$$

The hinge loss measures the difference between the maximal confidence that the classifier has over all classes and the confidence it has in the correct class. In computing this maximum, all wrong classes get a 'head start' of 1 added to their confidence. As a result, the hinge loss is 0 if the confidence in the correct class exceeds the confidence in the closest competing class by at least 1. Even though the hinge-loss is not differentiable everywhere, it gives rise to a tractable variant of the 0/1-loss learning problem, because the problem can be recast as an equivalent constrained optimization problem.
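A direct transcription of the hinge-loss formula is sketched below, where `scores[y]` stands for $c(x_i,y;w)$ and `correct` for $y_i$ (both names are hypothetical). The three calls show a zero loss when the correct class wins by a margin of at least 1, a positive loss when it wins by less, and a larger loss when a wrong class scores higher.

```python
def multiclass_hinge(scores, correct):
    """max_y (1[y != correct] + c(x, y; w)) - c(x, correct; w).

    scores[y] plays the role of c(x, y; w); every wrong class gets a +1 head start.
    The loss is never negative because y = correct contributes scores[correct]."""
    return max(s + (0.0 if y == correct else 1.0)
               for y, s in enumerate(scores)) - scores[correct]


print(multiclass_hinge([3.0, 1.5, 0.2], correct=0))  # 0.0 (margin 1.5 >= 1)
print(multiclass_hinge([3.0, 2.5, 0.2], correct=0))  # 0.5 (margin only 0.5)
print(multiclass_hinge([1.0, 2.0, 0.0], correct=0))  # 2.0 (a wrong class wins)
```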

Contrast with Generative Model

Contrast in Approaches

Suppose we are given $m$ class labels (classification) and $n$ feature variables, $Y=\{y_1,y_2,\dots,y_m\}$ and $X=\{x_1,x_2,\dots,x_n\}$, as the training samples.

A generative model takes the joint probability $P(x,y)$, where $x$ is the input and $y$ is the label, and predicts the most probable known label $\tilde{y}\in Y$ for the unknown variable $\tilde{x}$ using Bayes' rule.[3]

Discriminative models, as opposed to generative models, do not allow one to generate samples from the joint distribution of observed and target variables. However, for tasks such as classification and regression that do not require the joint distribution, discriminative models can yield superior performance (in part because they have fewer variables to compute).[4][5][3] On the other hand, generative models are typically more flexible than discriminative models in expressing dependencies in complex learning tasks. In addition, most discriminative models are inherently supervised and cannot easily support unsupervised learning. Application-specific details ultimately dictate the suitability of selecting a discriminative versus generative model.

Discriminative models and generative models also differ in how they introduce the posterior probability.[6] To minimize the expected loss, the misclassification of results should be minimized. In the discriminative model, the posterior probabilities $P(y\mid x)$ are inferred from a parametric model whose parameters are learned from the training data. Point estimates of the parameters are obtained by maximizing the likelihood or by computing a distribution over the parameters. Generative models, on the other hand, focus on the joint probability, so the class posterior probability is obtained through Bayes' theorem:[6]

$$P(y\mid x)=\frac{p(x\mid y)\,p(y)}{\sum_{i}p(x\mid i)\,p(i)}=\frac{p(x\mid y)\,p(y)}{p(x)}$$
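The generative route to the posterior can be sketched in a few lines: given class-conditional likelihoods $p(x\mid y)$ for one fixed input and class priors $p(y)$, Bayes' theorem normalizes their products by the evidence $p(x)$. The numbers and the helper name `bayes_posterior` are hypothetical; a real generative model would obtain $p(x\mid y)$ from a fitted density such as naive Bayes or a Gaussian mixture model.

```python
def bayes_posterior(likelihoods, priors):
    """P(y | x) = p(x | y) p(y) / sum_i p(x | i) p(i) for one fixed input x.

    likelihoods[y] is p(x | y) and priors[y] is p(y)."""
    joint = {y: likelihoods[y] * priors[y] for y in priors}
    evidence = sum(joint.values())  # p(x)
    return {y: j / evidence for y, j in joint.items()}


# Hypothetical numbers for a single observed input x.
posterior = bayes_posterior(likelihoods={"dog": 0.02, "rabbit": 0.08},
                            priors={"dog": 0.75, "rabbit": 0.25})
print(max(posterior, key=posterior.get))  # rabbit
```

Here the posterior is 3/7 for dog and 4/7 for rabbit: the larger likelihood outweighs the prior that favours dogs.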

Advantages vs. Disadvantages in Application

In repeated experiments applying logistic regression (discriminative) and naive Bayes (generative) to binary classification tasks, discriminative learning results in lower asymptotic error, while generative learning approaches its higher asymptotic error faster.[3] However, in Ulusoy and Bishop's joint work, Comparison of Generative and Discriminative Techniques for Object Detection and Classification, they state that the above statement is true only when the model is appropriate for the data (i.e. the data distribution is correctly modeled by the generative model).

Advantages

Significant advantages of using discriminative modeling are:

- Higher accuracy, which usually leads to better learning results.

- Allows simplification of the input and provides a direct approach to $P(y\mid x)$

- Saves computational resources

- Generates lower asymptotic errors

Compare these with the advantages of generative modeling:

- Takes all data into consideration, which could result in slower processing as a disadvantage

- Requires fewer training samples

- A flexible framework that can easily accommodate other needs of the application

Disadvantages

- Training method usually requires multiple numerical optimization techniques[1]

- Similarly, by definition, the discriminative model needs a combination of multiple subtasks to solve a complex real-world problem[2]

Optimizations in Applications

Since both approaches have advantages and disadvantages, combining them can work well in practice. For example, in the article A Joint Discriminative Generative Model for Deformable Model Construction and Classification,[7] Marras and his coauthors apply a combination of the two approaches to face classification and obtain higher accuracy than the traditional approach.

Similarly, Kelm[8] proposed combining the two approaches for pixel classification in his article Combining Generative and Discriminative Methods for Pixel Classification with Multi-Conditional Learning.

When extracting discriminative features prior to clustering, principal component analysis (PCA), though commonly used, is not necessarily a discriminative approach. In contrast, linear discriminant analysis (LDA) is discriminative.[9] LDA provides an efficient way of eliminating the disadvantage listed above: the discriminative model needs a combination of multiple subtasks before classification, and LDA offers a suitable solution to this problem by reducing the dimensionality.
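As an illustration of LDA as a discriminative dimensionality reduction, the following sketch computes Fisher's two-class linear discriminant in two dimensions, $w\propto S_W^{-1}(m_1-m_0)$, where $m_0$ and $m_1$ are the class means and $S_W$ is the within-class scatter matrix, and then projects each point onto $w$. The data points are hypothetical, and the two-class, two-dimensional case is chosen only so that the matrix inverse can be written out by hand.

```python
def mean(points):
    """Component-wise mean of 2-D points."""
    n = len(points)
    return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]


def scatter(points, m):
    """2x2 scatter matrix: sum over points of (p - m)(p - m)^T."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for p in points:
        d = [p[0] - m[0], p[1] - m[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def fisher_direction(class0, class1):
    """w = S_W^{-1} (m1 - m0) for two classes in 2-D."""
    m0, m1 = mean(class0), mean(class1)
    s0, s1 = scatter(class0, m0), scatter(class1, m1)
    sw = [[s0[i][j] + s1[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = [m1[0] - m0[0], m1[1] - m0[1]]
    return [inv[0][0] * diff[0] + inv[0][1] * diff[1],
            inv[1][0] * diff[0] + inv[1][1] * diff[1]]


def project(w, p):
    """Reduce a 2-D point to a single discriminative coordinate."""
    return w[0] * p[0] + w[1] * p[1]


# Hypothetical 2-D samples for two classes.
class0 = [(1.0, 2.0), (2.0, 3.0), (3.0, 3.0), (2.0, 1.0)]
class1 = [(6.0, 5.0), (7.0, 7.0), (8.0, 6.0), (7.0, 5.0)]
w = fisher_direction(class0, class1)
z0 = [project(w, p) for p in class0]
z1 = [project(w, p) for p in class1]
print(max(z0) < min(z1))  # True: the 1-D projections do not overlap
```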

In Beyerlein's paper DISCRIMINATIVE MODEL COMBINATION,[10] discriminative model combination provides a new approach to automatic speech recognition. It not only helps to optimize the integration of various kinds of models into one log-linear posterior probability distribution, but also aims at minimizing the empirical word error rate on the training samples.
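The log-linear combination idea can be sketched as follows: each model $j$ contributes $\log p_j(k\mid x)$ weighted by $\lambda_j$, and the weighted sum is renormalized into one posterior distribution. This is a simplified illustration, not Beyerlein's implementation: the two models, their posteriors, the candidate transcriptions and the weights are all hypothetical, and the weights are fixed here rather than trained to minimize the word error rate.

```python
import math


def log_linear_combination(model_posteriors, weights):
    """P(k | x) proportional to exp(sum_j lambda_j * log p_j(k | x)).

    model_posteriors: one dict per model mapping class k -> p_j(k | x).
    weights: one lambda_j per model (hypothetical, fixed in this sketch)."""
    classes = model_posteriors[0].keys()
    scores = {k: sum(lam * math.log(p[k]) for lam, p in zip(weights, model_posteriors))
              for k in classes}
    m = max(scores.values())  # subtract the max score for numerical stability
    exps = {k: math.exp(s - m) for k, s in scores.items()}
    z = sum(exps.values())
    return {k: e / z for k, e in exps.items()}


# Hypothetical posteriors from two models over two candidate transcriptions.
acoustic = {"recognize speech": 0.4, "wreck a nice beach": 0.6}
language = {"recognize speech": 0.9, "wreck a nice beach": 0.1}
combined = log_linear_combination([acoustic, language], weights=[1.0, 1.0])
print(max(combined, key=combined.get))  # recognize speech
```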

In the article A Unified and Discriminative Model for Query Refinement,[11] Guo and his coauthors use a unified discriminative model based on a linear classifier for query refinement, and obtain a much higher accuracy rate. Their experiment also includes a generative model for comparison with the unified model. As might be expected in a real-world application, the generative model performs worst among the compared models, including the models without their improvement.

Types

Examples of discriminative models used in machine learning include:

- Logistic regression, a type of generalized linear regression used for predicting binary or categorical outputs (also known as maximum entropy classifiers)

- Support vector machines

- Boosting (meta-algorithm)

- Conditional random fields

- Linear regression

- Neural networks

- Linear Discriminant Analysis (The result could be used as a linear classifier.)

- Random forests

- Perceptrons

References

- [1] Ballesteros, Miguel. "Discriminative Models" (PDF). Retrieved October 28, 2018.

- [2] Memisevic, Roland (December 21, 2006). "An introduction to structured discriminative learning". Retrieved October 29, 2018.

- [3] Ng, Andrew Y.; Jordan, Michael I. (2001). "On Discriminative vs. Generative classifiers: A comparison of logistic regression and naive Bayes".

- [4] Singla, Parag; Domingos, Pedro (2005). "Discriminative Training of Markov Logic Networks". Proceedings of the 20th National Conference on Artificial Intelligence - Volume 2. AAAI'05. Pittsburgh, Pennsylvania: AAAI Press: 868–873. ISBN 157735236X.

- [5] Lafferty, J.; McCallum, A.; Pereira, F. (2001). "Conditional Random Fields: Probabilistic Models for Segmenting and Labeling Sequence Data". In ICML.

- [6] Ulusoy, Ilkay (May 2016). "Comparison of Generative and Discriminative Techniques for Object Detection and Classification" (PDF). Retrieved October 30, 2018.

- [7] Marras, Ioannis (2017). "A Joint Discriminative Generative Model for Deformable Model Construction and Classification" (PDF). Retrieved November 5, 2018.

- [8] Kelm, B. Michael. "Combining Generative and Discriminative Methods for Pixel Classification with Multi-Conditional Learning" (PDF). Retrieved November 5, 2018.

- [9] Wang, Zhangyang (2015). "A Joint Optimization Framework of Sparse Coding and Discriminative Clustering" (PDF). Retrieved November 5, 2018.

- [10] Beyerlein, Peter. "DISCRIMINATIVE MODEL COMBINATION". Retrieved November 5, 2018.

- [11] Guo, Jiafeng. "A Unified and Discriminative Model for Query Refinement" (PDF). Retrieved November 5, 2018.
