Computational complexity of mathematical operations


The following tables list the computational complexity of various algorithms for common mathematical operations.

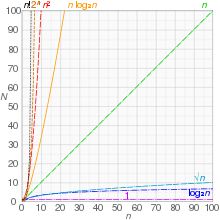

Here, complexity refers to the time complexity of performing computations on a multitape Turing machine.[1] See big O notation for an explanation of the notation used.

Note: Due to the variety of multiplication algorithms, M(n) below stands in for the complexity of the chosen multiplication algorithm.


Arithmetic functions

| Operation | Input | Output | Algorithm | Complexity |
|---|---|---|---|---|
| Addition | Two n-digit numbers N, N | One n+1-digit number | Schoolbook addition with carry | $\Theta(n)$, $\Theta(\log N)$ |
| Subtraction | Two n-digit numbers N, N | One n+1-digit number | Schoolbook subtraction with borrow | $\Theta(n)$, $\Theta(\log N)$ |
| Multiplication | Two n-digit numbers | One 2n-digit number | Schoolbook long multiplication | $O(n^2)$ |
| | | | Karatsuba algorithm | $O(n^{1.585})$ |
| | | | 3-way Toom–Cook multiplication | $O(n^{1.465})$ |
| | | | k-way Toom–Cook multiplication | $O(n^{\log(2k-1)/\log k})$ |
| | | | Mixed-level Toom–Cook (Knuth 4.3.3-T)[2] | $O(n \, 2^{\sqrt{2 \log n}} \log n)$ |
| | | | Schönhage–Strassen algorithm | $O(n \log n \log\log n)$ |
| | | | Fürer's algorithm[3] | $O(n \log n \, 2^{2 \log^* n})$ |
| Division | Two n-digit numbers | One n-digit number | Schoolbook long division | $O(n^2)$ |
| | | | Burnikel–Ziegler divide-and-conquer division[4] | $O(M(n) \log n)$ |
| | | | Newton–Raphson division | $O(M(n))$ |
| Square root | One n-digit number | One n-digit number | Newton's method | $O(M(n))$ |
| Modular exponentiation | Two n-digit numbers and a k-bit exponent | One n-digit number | Repeated multiplication and reduction | $O(M(n) \, 2^k)$ |
| | | | Exponentiation by squaring | $O(M(n) \, k)$ |
| | | | Exponentiation with Montgomery reduction | $O(M(n) \, k)$ |
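To illustrate the Karatsuba row in the table above, here is a minimal Python sketch rather than a tuned implementation. The function name `karatsuba`, the `cutoff_bits` parameter, and the choice to split operands on bits instead of decimal digits are assumptions made for this example; inputs are assumed to be non-negative integers.

```python
def karatsuba(x, y, cutoff_bits=64):
    """Multiply two non-negative integers with Karatsuba's three-product recursion."""
    if x < 0 or y < 0:
        raise ValueError("this sketch only handles non-negative integers")
    # Guard (an assumption of this sketch): very small cutoffs could stop the
    # operand size from shrinking, so enforce a floor.
    cutoff_bits = max(cutoff_bits, 8)
    # Base case: fall back to the built-in product for small operands.
    if x.bit_length() <= cutoff_bits or y.bit_length() <= cutoff_bits:
        return x * y

    # Split both operands at the same bit position.
    half = max(x.bit_length(), y.bit_length()) // 2
    mask = (1 << half) - 1
    x_hi, x_lo = x >> half, x & mask
    y_hi, y_lo = y >> half, y & mask

    # Three recursive products instead of four.
    z0 = karatsuba(x_lo, y_lo, cutoff_bits)
    z2 = karatsuba(x_hi, y_hi, cutoff_bits)
    z1 = karatsuba(x_lo + x_hi, y_lo + y_hi, cutoff_bits) - z0 - z2

    # Recombine: x*y = z2 * 2^(2*half) + z1 * 2^half + z0.
    return (z2 << (2 * half)) + (z1 << half) + z0
```

Replacing four half-size products with three is what gives the recurrence T(n) = 3T(n/2) + O(n), and hence the exponent log 3 / log 2 ≈ 1.585 listed in the table.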

Algebraic functions

| Operation | Input | Output | Algorithm | Complexity |
|---|---|---|---|---|
| Polynomial evaluation | One polynomial of degree n with fixed-size polynomial coefficients | One fixed-size number | Direct evaluation | $\Theta(n)$ |
| | | | Horner's method | $\Theta(n)$ |
| Polynomial gcd (over Z[x] or F[x]) | Two polynomials of degree n with fixed-size polynomial coefficients | One polynomial of degree at most n | Euclidean algorithm | $O(n^2)$ |
| | | | Fast Euclidean algorithm[5] | $O(M(n) \log n)$ |
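Horner's method from the table above can be sketched in a few lines of Python. The function name `horner` and the convention that coefficients are listed from the highest degree down are assumptions of this example.

```python
def horner(coeffs, x):
    """Evaluate a polynomial at x.

    coeffs lists the coefficients from the highest degree to the constant
    term, e.g. [2, -6, 2, -1] stands for 2x^3 - 6x^2 + 2x - 1.
    """
    result = 0
    for c in coeffs:
        # One multiplication and one addition per coefficient.
        result = result * x + c
    return result
```

For a polynomial of degree n this performs n multiplications and n additions, consistent with the linear bound in the table when each arithmetic step has fixed cost.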

Special functions

Many of the methods in this section are given in Borwein & Borwein.[6]

Elementary functions

The elementary functions are constructed by composing arithmetic operations, the exponential function (exp), the natural logarithm (log), trigonometric functions (sin, cos), and their inverses. The complexity of an elementary function is equivalent to that of its inverse, since all elementary functions are analytic and hence invertible by means of Newton's method. In particular, if either exp or log in the complex domain can be computed with some complexity, then that complexity is attainable for all other elementary functions.
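As a rough illustration of the inversion argument in the paragraph above, the sketch below computes exp(a) using only a logarithm routine and Newton's method applied to f(y) = log(y) − a, which gives the update y ← y·(1 + a − log y). It uses Python's standard `decimal` module as the arbitrary-precision backend; the function name `exp_via_newton`, the ten guard digits, and the floating-point starting guess are assumptions of this example, and the argument is assumed to be moderate in size so that the starting guess does not overflow.

```python
from decimal import Decimal, localcontext
import math

def exp_via_newton(a, digits):
    """Approximate exp(a) to about `digits` significant digits using only ln."""
    a = Decimal(a)
    with localcontext() as ctx:
        ctx.prec = digits + 10                  # guard digits (an assumption)
        y = Decimal(math.exp(float(a)))         # starting guess, ~15 good digits
        correct = 15
        while correct < ctx.prec:
            # Newton step for f(y) = ln(y) - a:  y <- y * (1 + a - ln y)
            y = y * (1 + a - y.ln())
            correct *= 2                        # quadratic convergence
        ctx.prec = digits
        return +y                               # round to the requested precision
```

Because the number of correct digits roughly doubles per step, only about log₂(digits) logarithm evaluations are needed, and a more careful version would run the early steps at reduced precision.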

Below, the size n refers to the number of digits of precision at which the function is to be evaluated.

| Algorithm | Applicability | Complexity |
|---|---|---|
| Taylor series; repeated argument reduction (e.g. $\exp(2x) = [\exp(x)]^2$) and direct summation | exp, log, sin, cos, arctan | $O(M(n) \, n^{1/2})$ |
| Taylor series; FFT-based acceleration | exp, log, sin, cos, arctan | $O(M(n) \, n^{1/3} (\log n)^2)$ |
| Taylor series; binary splitting + bit burst method[7] | exp, log, sin, cos, arctan | $O(M(n) (\log n)^2)$ |
| Arithmetic–geometric mean iteration[8] | exp, log, sin, cos, arctan | $O(M(n) \log n)$ |
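The first row of the table above (Taylor series with repeated argument reduction) can be sketched as follows for exp, again using Python's standard `decimal` module. The function name `exp_taylor`, the choice of about √n halvings, and the number of guard digits are assumptions of this example, not a prescription from the cited sources.

```python
from decimal import Decimal, localcontext
import math

def exp_taylor(x, digits):
    """Approximate exp(x) by Taylor summation after halving the argument r times."""
    r = math.isqrt(digits) + 1          # about sqrt(n) halvings (an assumption)
    guard = 10 + r                      # each squaring can double the relative error
    with localcontext() as ctx:
        ctx.prec = digits + guard
        y = Decimal(x) / (1 << r)       # reduced argument x / 2^r
        eps = Decimal(10) ** -(digits + guard)
        term = Decimal(1)
        total = Decimal(1)
        k = 1
        # Direct summation of sum_k y^k / k! until the terms are negligible.
        while abs(term) > eps:
            term = term * y / k
            total += term
            k += 1
        # Undo the reduction: exp(x) = exp(x / 2^r)^(2^r), i.e. r squarings.
        for _ in range(r):
            total *= total
        ctx.prec = digits
        return +total
```

Shrinking the argument makes each series term contribute more digits, so fewer terms are needed at the price of r extra squarings; balancing the two is what leads to the square-root factor in the table.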

It is not known whether O(M(n) log n) is the optimal complexity for elementary functions. The best known lower bound is the trivial bound Ω(M(n)).

Non-elementary functions

| Function | Input | Algorithm | Complexity |
|---|---|---|---|
| Gamma function | n-digit number | Series approximation of the incomplete gamma function | $O(M(n) \, n^{1/2} (\log n)^2)$ |
| | Fixed rational number | Hypergeometric series | $O(M(n) (\log n)^2)$ |
| | m/24, m an integer | Arithmetic–geometric mean iteration | $O(M(n) \log n)$ |
| Hypergeometric function ${}_pF_q$ | n-digit number | (As described in Borwein & Borwein) | $O(M(n) \, n^{1/2} (\log n)^2)$ |
| | Fixed rational number | Hypergeometric series | $O(M(n) (\log n)^2)$ |

Mathematical constants

This table gives the complexity of computing approximations to the given constants to n correct digits.

| Constant | Algorithm | Complexity |
|---|---|---|
| Golden ratio, φ | Newton's method | $O(M(n))$ |
| Square root of 2, √2 | Newton's method | $O(M(n))$ |
| Euler's number, e | Binary splitting of the Taylor series for the exponential function | $O(M(n) \log n)$ |
| | Newton inversion of the natural logarithm | $O(M(n) \log n)$ |
| Pi, π | Binary splitting of the arctan series in Machin's formula | $O(M(n) (\log n)^2)$ |
| | Salamin–Brent algorithm | $O(M(n) \log n)$ |
| Euler's constant, γ | Sweeney's method (approximation in terms of the exponential integral) | $O(M(n) (\log n)^2)$ |
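The Salamin–Brent algorithm listed above (also known as the Gauss–Legendre algorithm) is an arithmetic–geometric mean iteration. A minimal sketch with Python's standard `decimal` module follows; the function name `pi_gauss_legendre`, the ten guard digits, and the iteration count are assumptions chosen for this example.

```python
from decimal import Decimal, localcontext

def pi_gauss_legendre(digits):
    """Approximate pi to about `digits` significant digits."""
    with localcontext() as ctx:
        ctx.prec = digits + 10                 # guard digits (an assumption)
        a = Decimal(1)
        b = 1 / Decimal(2).sqrt()
        t = Decimal(1) / 4
        p = Decimal(1)
        # The number of correct digits roughly doubles each round, so about
        # log2(digits) rounds are enough.
        for _ in range(max(1, digits.bit_length())):
            a_next = (a + b) / 2               # arithmetic mean
            b = (a * b).sqrt()                 # geometric mean
            t -= p * (a - a_next) ** 2
            a = a_next
            p *= 2
        result = (a + b) ** 2 / (4 * t)
        ctx.prec = digits
        return +result
```

Each round costs a constant number of multiplications, divisions, and square roots at full precision, which is where the $O(M(n) \log n)$ bound in the table comes from.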

Number theory

Algorithms for number theoretical calculations are studied in computational number theory.

| Operation | Input | Output | Algorithm | Complexity |
|---|---|---|---|---|
| Greatest common divisor | Two n-digit numbers | One number with at most n digits | Euclidean algorithm | $O(n^2)$ |
| | | | Binary GCD algorithm | $O(n^2)$ |
| | | | Left/right k-ary binary GCD algorithm[9] | $O(n^2/\log n)$ |
| | | | Stehlé–Zimmermann algorithm[10] | $O(M(n) \log n)$ |
| | | | Schönhage controlled Euclidean descent algorithm[11] | $O(M(n) \log n)$ |
| Jacobi symbol | Two n-digit numbers | 0, −1, or 1 | Schönhage controlled Euclidean descent algorithm[12] | $O(M(n) \log n)$ |
| | | | Stehlé–Zimmermann algorithm[13] | $O(M(n) \log n)$ |
| Factorial | A fixed-size number m | One $O(m \log m)$-digit number | Bottom-up multiplication | $O(M(m^2) \log m)$ |
| | | | Binary splitting | $O(M(m \log m) \log m)$ |
| | | | Exponentiation of the prime factors of m | $O(M(m \log m) \log\log m)$,[14] $O(M(m \log m))$[1] |
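The binary GCD algorithm from the table above replaces division with shifts and subtractions. The following Python sketch shows one common formulation; the function name `binary_gcd` and the use of `bit_length` to count trailing zero bits are choices made for this example.

```python
def binary_gcd(a, b):
    """Greatest common divisor using only shifts, comparisons and subtraction."""
    a, b = abs(a), abs(b)
    if a == 0:
        return b
    if b == 0:
        return a

    # Number of factors of 2 shared by a and b (trailing zeros of a | b).
    shift = ((a | b) & -(a | b)).bit_length() - 1

    # Make a odd by stripping its trailing zero bits.
    a >>= (a & -a).bit_length() - 1
    while b:
        # Strip factors of 2 from b; they cannot be common since a is odd.
        b >>= (b & -b).bit_length() - 1
        if a > b:
            a, b = b, a
        b -= a                  # both odd, so the difference is even
    # Restore the common power of two.
    return a << shift
```

Each pass removes at least one bit from the operands and costs time linear in their length, which matches the quadratic bound given in the table.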

Matrix algebra

The following complexity figures assume that arithmetic with individual elements has complexity O(1), as is the case with fixed-precision floating-point arithmetic or operations on a finite field.

| Operation | Input | Output | Algorithm | Complexity |
|---|---|---|---|---|
| Matrix multiplication | Two n×n matrices | One n×n matrix | Schoolbook matrix multiplication | $O(n^3)$ |
| | | | Strassen algorithm | $O(n^{2.807})$ |
| | | | Coppersmith–Winograd algorithm | $O(n^{2.376})$ |
| | | | Optimized CW-like algorithms[15][16][17] | $O(n^{2.373})$ |
| Matrix multiplication | One n×m matrix & one m×p matrix | One n×p matrix | Schoolbook matrix multiplication | $O(nmp)$ |
| Matrix inversion* | One n×n matrix | One n×n matrix | Gauss–Jordan elimination | $O(n^3)$ |
| | | | Strassen algorithm | $O(n^{2.807})$ |
| | | | Coppersmith–Winograd algorithm | $O(n^{2.376})$ |
| | | | Optimized CW-like algorithms | $O(n^{2.373})$ |
| Singular value decomposition | One m×n matrix | One m×m matrix, one m×n matrix, & one n×n matrix | | $O(mn^2)$ ($m \ge n$) |
| | | One m×r matrix, one r×r matrix, & one n×r matrix | | |
| Determinant | One n×n matrix | One number | Laplace expansion | $O(n!)$ |
| | | | Division-free algorithm[18] | $O(n^4)$ |
| | | | LU decomposition | $O(n^3)$ |
| | | | Bareiss algorithm | $O(n^3)$ |
| | | | Fast matrix multiplication[19] | $O(n^{2.373})$ |
| Back substitution | Triangular matrix | n solutions | Back substitution[20] | $O(n^2)$ |
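As an illustration of the Bareiss row in the determinant entries above, the sketch below performs fraction-free elimination on an integer matrix, so every intermediate value stays an integer. The function name `bareiss_det` and the simple row-swap pivoting are assumptions of this example; the input is assumed to be a square list of lists of Python integers.

```python
def bareiss_det(matrix):
    """Determinant of a square integer matrix by fraction-free elimination."""
    a = [row[:] for row in matrix]      # work on a copy
    n = len(a)
    if n == 0:
        return 1                        # determinant of the empty matrix
    sign = 1
    prev = 1                            # pivot from the previous step
    for k in range(n - 1):
        if a[k][k] == 0:
            # Look for a row below with a non-zero entry in column k.
            for i in range(k + 1, n):
                if a[i][k] != 0:
                    a[k], a[i] = a[i], a[k]
                    sign = -sign        # a row swap flips the sign
                    break
            else:
                return 0                # whole column is zero: singular
        for i in range(k + 1, n):
            for j in range(k + 1, n):
                # The division by the previous pivot is always exact.
                a[i][j] = (a[i][j] * a[k][k] - a[i][k] * a[k][j]) // prev
        prev = a[k][k]
    return sign * a[n - 1][n - 1]
```

The three nested loops give the cubic operation count from the table. That count treats each arithmetic step as constant cost, as stated at the start of this section, whereas Python integers here can grow with the input.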

In 2005, Henry Cohn, Robert Kleinberg, Balázs Szegedy, and Chris Umans showed that either of two different conjectures would imply that the exponent of matrix multiplication is 2.[21]

\* A matrix can be inverted blockwise: inverting an n×n matrix requires inverting two half-sized matrices and performing six multiplications between half-sized matrices. Since matrix multiplication has a lower bound of $\Omega(n^2 \log n)$ operations,[22] it can be shown that a divide-and-conquer algorithm that uses blockwise inversion runs with the same time complexity as the matrix multiplication algorithm it uses internally.[23]
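The blockwise inversion described in the note above can be sketched with exact rational arithmetic from Python's standard `fractions` module. The helper names (`matmul`, `matadd`, `matsub`, `matneg`, `block_inverse`) are made up for this example, and the sketch assumes that the leading block and its Schur complement are invertible at every level of the recursion, which is not true of every invertible matrix (no pivoting is done).

```python
from fractions import Fraction

def matmul(x, y):
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*y)] for row in x]

def matadd(x, y):
    return [[p + q for p, q in zip(r, s)] for r, s in zip(x, y)]

def matsub(x, y):
    return [[p - q for p, q in zip(r, s)] for r, s in zip(x, y)]

def matneg(x):
    return [[-p for p in r] for r in x]

def block_inverse(m):
    """Invert a square matrix via two half-size inversions and six products."""
    n = len(m)
    if n == 1:
        return [[1 / Fraction(m[0][0])]]        # ZeroDivisionError if singular
    h = n // 2
    a = [row[:h] for row in m[:h]]
    b = [row[h:] for row in m[:h]]
    c = [row[:h] for row in m[h:]]
    d = [row[h:] for row in m[h:]]

    a_inv = block_inverse(a)                    # inversion 1
    c_ainv = matmul(c, a_inv)                   # product 1
    ainv_b = matmul(a_inv, b)                   # product 2
    s = matsub(d, matmul(c_ainv, b))            # product 3: Schur complement
    s_inv = block_inverse(s)                    # inversion 2
    top_right = matneg(matmul(ainv_b, s_inv))   # product 4
    bottom_left = matneg(matmul(s_inv, c_ainv)) # product 5
    top_left = matsub(a_inv, matmul(ainv_b, bottom_left))  # product 6

    top = [l + r for l, r in zip(top_left, top_right)]
    bottom = [l + r for l, r in zip(bottom_left, s_inv)]
    return top + bottom
```

Here `matmul` is the schoolbook product, so the sketch as written is cubic; the point of the note is that swapping in a faster multiplication routine lowers the cost of the inversion accordingly.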

References

1. A. Schönhage, A.F.W. Grotefeld, E. Vetter. Fast Algorithms: A Multitape Turing Machine Implementation. BI Wissenschafts-Verlag, Mannheim, 1994.
2. D. Knuth. The Art of Computer Programming, Volume 2. Third Edition, Addison-Wesley, 1997.
3. Martin Fürer. Faster Integer Multiplication. Archived 2013-04-25 at the Wayback Machine. Proceedings of the 39th Annual ACM Symposium on Theory of Computing, San Diego, California, USA, June 11–13, 2007, pp. 55–67.
4. Christoph Burnikel and Joachim Ziegler. Fast Recursive Division. Im Stadtwald, D- Saarbrücken, 1998.
5. http://planetmath.org/fasteuclideanalgorithm
6. J. Borwein & P. Borwein. Pi and the AGM: A Study in Analytic Number Theory and Computational Complexity. John Wiley, 1987.
7. David and Gregory Chudnovsky. Approximations and complex multiplication according to Ramanujan. Ramanujan revisited, Academic Press, 1988, pp. 375–472.
8. Richard P. Brent. Multiple-precision zero-finding methods and the complexity of elementary function evaluation. In: Analytic Computational Complexity (J. F. Traub, ed.), Academic Press, New York, 1975, pp. 151–176.
9. J. Sorenson (1994). "Two Fast GCD Algorithms". Journal of Algorithms. 16 (1): 110–144. doi:10.1006/jagm.1994.1006.
10. R. Crandall & C. Pomerance. Prime Numbers – A Computational Perspective. Second Edition, Springer, 2005.
11. Möller N (2008). "On Schönhage's algorithm and subquadratic integer gcd computation" (PDF). Mathematics of Computation. 77 (261): 589–607. Bibcode:2008MaCom..77..589M. doi:10.1090/S0025-5718-07-02017-0.
12. Bernstein D J. "Faster Algorithms to Find Non-squares Modulo Worst-case Integers".
13. Richard P. Brent; Paul Zimmermann (2010). "An O(M(n) log n) algorithm for the Jacobi symbol". arXiv:1004.2091.
14. Borwein, P. (1985). "On the complexity of calculating factorials". Journal of Algorithms. 6: 376–380. doi:10.1016/0196-6774(85)90006-9.
15. Davie, A.M.; Stothers, A.J. (2013), "Improved bound for complexity of matrix multiplication", Proceedings of the Royal Society of Edinburgh, 143A: 351–370, doi:10.1017/S0308210511001648
16. Vassilevska Williams, Virginia (2011), Breaking the Coppersmith-Winograd barrier (PDF)
17. Le Gall, François (2014), "Powers of tensors and fast matrix multiplication", Proceedings of the 39th International Symposium on Symbolic and Algebraic Computation (ISSAC 2014), arXiv:1401.7714, Bibcode:2014arXiv1401.7714L
18. http://page.mi.fu-berlin.de/rote/Papers/pdf/Division-free+algorithms.pdf
19. Aho, Alfred V.; Hopcroft, John E.; Ullman, Jeffrey D. (1974), The Design and Analysis of Computer Algorithms, Addison-Wesley, Theorem 6.6, p. 241
20. J. B. Fraleigh and R. A. Beauregard, "Linear Algebra," Addison-Wesley Publishing Company, 1987, p. 95.
21. Henry Cohn, Robert Kleinberg, Balázs Szegedy, and Chris Umans. Group-theoretic Algorithms for Matrix Multiplication. arXiv:math.GR/0511460. Proceedings of the 46th Annual Symposium on Foundations of Computer Science, 23–25 October 2005, Pittsburgh, PA, IEEE Computer Society, pp. 379–388.
22. Ran Raz. On the complexity of matrix product. In Proceedings of the thirty-fourth annual ACM symposium on Theory of computing. ACM Press, 2002. doi:10.1145/509907.509932.
23. T.H. Cormen, C.E. Leiserson, R.L. Rivest, C. Stein, Introduction to Algorithms, 3rd ed., MIT Press, Cambridge, MA, 2009, § 28.2.

Further reading

- Brent, Richard P.; Zimmermann, Paul (2010). Modern Computer Arithmetic. Cambridge University Press. ISBN 978-0-521-19469-3.

- Knuth, Donald Ervin (1997). The Art of Computer Programming. Volume 2: Seminumerical Algorithms (3rd ed.). Addison-Wesley. ISBN 0-201-89684-2.