Rewriting

In mathematics, computer science, and logic, rewriting covers a wide range of (potentially non-deterministic) methods of replacing subterms of a formula with other terms. The objects of focus for this article include rewriting systems (also known as rewrite systems, rewrite engines[1] or reduction systems). In their most basic form, they consist of a set of objects, plus relations on how to transform those objects.

Rewriting can be non-deterministic. One rule to rewrite a term could be applied in many different ways to that term, or more than one rule could be applicable. Rewriting systems then do not provide an algorithm for changing one term to another, but a set of possible rule applications. When combined with an appropriate algorithm, however, rewrite systems can be viewed as computer programs, and several theorem provers[2] and declarative programming languages are based on term rewriting.[3][4]

Intuitive examples

Logic

In logic, the procedure for obtaining the conjunctive normal form (CNF) of a formula can be implemented as a rewriting system.[5] The rules of an example of such a system would be:

- $\neg\neg A \to A$ (double negation elimination)

- $\neg(A \land B) \to \neg A \lor \neg B$ (De Morgan's laws)

- $\neg(A \lor B) \to \neg A \land \neg B$

- $(A \land B) \lor C \to (A \lor C) \land (B \lor C)$ (distributivity)

- $A \lor (B \land C) \to (A \lor B) \land (A \lor C)$,[note 1]

where the symbol ($\to$) indicates that an expression matching the left-hand side of the rule can be rewritten to one formed by the right-hand side, and the symbols $A$, $B$, $C$ each denote a subexpression. In such a system, each rule is chosen so that the left side is equivalent to the right side. Consequently, when the left side matches a subexpression, rewriting that subexpression from left to right preserves the logical value of the entire expression.
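As an illustration, the CNF procedure can be sketched in Python. This is a minimal sketch, not a reference implementation: it assumes the usual five rules (double negation elimination, the two De Morgan laws, and the two distributivity rules), encodes formulas as nested tuples such as `("or", "p", "q")`, and uses the hypothetical helper names `rewrite_once` and `normalize`.

```python
def rewrite_once(t):
    """Apply one CNF rule at the root of t; return None if no rule matches there."""
    if isinstance(t, str):                      # atoms cannot be rewritten
        return None
    if t[0] == "not" and not isinstance(t[1], str):
        inner = t[1]
        if inner[0] == "not":                   # not not A  ->  A
            return inner[1]
        if inner[0] == "and":                   # not (A and B)  ->  not A or not B
            return ("or", ("not", inner[1]), ("not", inner[2]))
        if inner[0] == "or":                    # not (A or B)  ->  not A and not B
            return ("and", ("not", inner[1]), ("not", inner[2]))
    if t[0] == "or":
        a, b = t[1], t[2]
        if not isinstance(a, str) and a[0] == "and":   # (A and B) or C
            return ("and", ("or", a[1], b), ("or", a[2], b))
        if not isinstance(b, str) and b[0] == "and":   # A or (B and C)
            return ("and", ("or", a, b[1]), ("or", a, b[2]))
    return None


def normalize(t):
    """Rewrite t until no rule applies at the root or in any subterm."""
    while True:
        r = rewrite_once(t)
        if r is not None:
            t = r
            continue
        if isinstance(t, str):
            return t
        new = (t[0],) + tuple(normalize(s) for s in t[1:])
        if new == t:                            # nothing changed: t is a normal form
            return t
        t = new


# not (p or (q and not r))
formula = ("not", ("or", "p", ("and", "q", ("not", "r"))))
cnf = normalize(formula)
print(cnf)
```

The strategy used here (try the root first, then the subterms) is only one of several possible choices; the rule set itself does not prescribe an order of application.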

Linguistics

In linguistics, rewrite rules, also called phrase structure rules, are used in some systems of generative grammar,[6] as a means of generating the grammatically correct sentences of a language. Such a rule typically takes the form A → X, where A is a syntactic category label, such as noun phrase or sentence, and X is a sequence of such labels or morphemes, expressing the fact that A can be replaced by X in generating the constituent structure of a sentence. For example, the rule S → NP VP means that a sentence can consist of a noun phrase followed by a verb phrase; further rules will specify what sub-constituents a noun phrase and a verb phrase can consist of, and so on.
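The generative use of such rules can be sketched as follows. Only the rule S → NP VP comes from the text; the remaining rules and the tiny lexicon are hypothetical, chosen to keep the grammar finite, and `expand` is an illustrative helper name.

```python
from itertools import product

# Phrase structure rules: each category maps to its possible right-hand sides.
# Everything except "S -> NP VP" is an assumption made for this sketch.
RULES = {
    "S":   [["NP", "VP"]],
    "NP":  [["Det", "N"]],
    "VP":  [["V", "NP"]],
    "Det": [["the"]],
    "N":   [["dog"], ["cat"]],
    "V":   [["sees"]],
}


def expand(symbol):
    """Return every word sequence derivable from symbol (grammar must be non-recursive)."""
    if symbol not in RULES:                 # a word (morpheme): nothing to rewrite
        return [[symbol]]
    results = []
    for rhs in RULES[symbol]:
        parts = [expand(s) for s in rhs]    # all expansions of each label in the rule
        for combo in product(*parts):
            results.append([w for part in combo for w in part])
    return results


sentences = [" ".join(words) for words in expand("S")]
print(sentences)
```

Because the sketch enumerates all derivations exhaustively, it would not terminate on a recursive grammar; a real generator would bound the derivation depth.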

Abstract rewriting systems

From the above examples, it is clear that we can think of rewriting systems in an abstract manner. We need to specify a set of objects and the rules that can be applied to transform them. The most general (unidimensional) setting of this notion is called an abstract reduction system (abbreviated ARS), although more recently authors use abstract rewriting system as well.[7] (The preference for the word "reduction" here instead of "rewriting" constitutes a departure from the uniform use of "rewriting" in the names of systems that are particularizations of ARS. Because the word "reduction" does not appear in the names of more specialized systems, in older texts reduction system is a synonym for ARS.)[8]

An ARS is simply a set A, whose elements are usually called objects, together with a binary relation on A, traditionally denoted by →, and called the reduction relation, rewrite relation[9] or just reduction.[8] This (entrenched) terminology using "reduction" is a little misleading, because the relation is not necessarily reducing some measure of the objects; this will become more apparent when we discuss string-rewriting systems further in this article.

Example 1. Suppose the set of objects is T = {a, b, c} and the binary relation is given by the rules a → b, b → a, a → c, and b → c. Observe that these rules can be applied to both a and b in any fashion to get the term c. Such a property is clearly an important one. Note also, that c is, in a sense, a "simplest" term in the system, since nothing can be applied to c to transform it any further. This example leads us to define some important notions in the general setting of an ARS. First we need some basic notions and notations.[10]

- $\overset{*}{\to}$ is the transitive closure of $\to \cup =$, where $=$ is the identity relation, i.e. $\overset{*}{\to}$ is the smallest preorder (reflexive and transitive relation) containing $\to$. It is also called the reflexive transitive closure of $\to$.

- $\leftrightarrow$ is $\to \cup \to^{-1}$, that is the union of the relation $\to$ with its converse relation, also known as the symmetric closure of $\to$.

- $\overset{*}{\leftrightarrow}$ is the transitive closure of $\leftrightarrow \cup =$, that is $\overset{*}{\leftrightarrow}$ is the smallest equivalence relation containing $\to$. It is also known as the reflexive transitive symmetric closure of $\to$.
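For a finite ARS these closures can be computed directly. The following sketch represents the relation of Example 1 as a set of pairs; the function names are illustrative, and the naive fixed-point loop is chosen for readability rather than efficiency.

```python
def reflexive_transitive_closure(objects, rel):
    """Smallest reflexive and transitive relation on objects containing rel."""
    closure = set(rel) | {(x, x) for x in objects}
    changed = True
    while changed:                          # repeat until no new pair can be added
        changed = False
        for (x, y) in list(closure):
            for (y2, z) in list(closure):
                if y == y2 and (x, z) not in closure:
                    closure.add((x, z))
                    changed = True
    return closure


def symmetric_closure(rel):
    """Union of rel with its converse relation."""
    return set(rel) | {(y, x) for (x, y) in rel}


# Example 1: T = {a, b, c} with a -> b, b -> a, a -> c, b -> c
T = {"a", "b", "c"}
ARROW = {("a", "b"), ("b", "a"), ("a", "c"), ("b", "c")}

star = reflexive_transitive_closure(T, ARROW)                      # ->*
equiv = reflexive_transitive_closure(T, symmetric_closure(ARROW))  # <->*
print(sorted(star))
print(sorted(equiv))
```

In Example 1 the pair (c, a) is absent from the reflexive transitive closure, because nothing rewrites c, but it is present in the equivalence closure, which relates all three objects.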

Normal forms, joinability and the word problem

An object x in A is called reducible if there exists some other y in A such that $x \to y$; otherwise it is called irreducible or a normal form. An object y is called a normal form of x if $x \overset{*}{\to} y$, and y is irreducible. If x has a unique normal form, then this is usually denoted with $x{\downarrow}$. In example 1 above, c is a normal form, and $c = a{\downarrow} = b{\downarrow}$. If every object has at least one normal form, the ARS is called normalizing.

A related, but weaker notion than the existence of normal forms is that of two objects being joinable: x and y are said to be joinable if there exists some z with the property that $x \overset{*}{\to} z \overset{*}{\leftarrow} y$. From this definition, it is apparent that one may define the joinability relation as $\overset{*}{\to} \circ \overset{*}{\leftarrow}$, where $\circ$ is the composition of relations. Joinability is usually denoted, somewhat confusingly, also with $\downarrow$, but in this notation the down arrow is a binary relation, i.e. we write $x \downarrow y$ if x and y are joinable.
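For a finite ARS, normal forms and joinability reduce to simple reachability questions. The sketch below uses the relation of Example 1; the helper names `successors`, `reachable`, `normal_forms` and `joinable` are illustrative.

```python
def successors(rel, x):
    """All y with x -> y."""
    return {y for (u, y) in rel if u == x}


def reachable(rel, x):
    """All y with x ->* y (x itself included)."""
    seen, stack = {x}, [x]
    while stack:
        u = stack.pop()
        for v in successors(rel, u):
            if v not in seen:
                seen.add(v)
                stack.append(v)
    return seen


def normal_forms(rel, x):
    """Irreducible objects reachable from x."""
    return {y for y in reachable(rel, x) if not successors(rel, y)}


def joinable(rel, x, y):
    """True if some z satisfies x ->* z and y ->* z."""
    return bool(reachable(rel, x) & reachable(rel, y))


# Example 1: a -> b, b -> a, a -> c, b -> c
ARROW = {("a", "b"), ("b", "a"), ("a", "c"), ("b", "c")}

print(normal_forms(ARROW, "a"))     # the only normal form of a is c
print(joinable(ARROW, "a", "b"))    # a and b are joinable (for instance at c)
```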

One of the important problems that may be formulated in an ARS is the word problem: given x and y, are they equivalent under $\overset{*}{\leftrightarrow}$? This is a very general setting for formulating the word problem for the presentation of an algebraic structure. For instance, the word problem for groups is a particular case of an ARS word problem. Central to an "easy" solution for the word problem is the existence of unique normal forms: in this case if two objects have the same normal form, then they are equivalent under $\overset{*}{\leftrightarrow}$. The word problem for an ARS is undecidable in general.

The Church–Rosser property and confluence

An ARS is said to possess the Church–Rosser property if $x \overset{*}{\leftrightarrow} y$ implies $x \downarrow y$. In words, the Church–Rosser property means that any two equivalent objects are joinable. Alonzo Church and J. Barkley Rosser proved in 1936 that lambda calculus has this property;[11] hence the name of the property.[12] (That lambda calculus has this property is also known as the Church–Rosser theorem.) In an ARS with the Church–Rosser property the word problem may be reduced to the search for a common successor. In a Church–Rosser system, an object has at most one normal form; that is, the normal form of an object is unique if it exists, but it may well not exist.

Several different properties are equivalent to the Church–Rosser property, but may be simpler to check in some particular setting. In particular, confluence is equivalent to Church–Rosser. An ARS is said to be:

- confluent if for all w, x, and y in A, $x \overset{*}{\leftarrow} w \overset{*}{\to} y$ implies $x \downarrow y$. Roughly speaking, confluence says that no matter how two paths diverge from a common ancestor (w), the paths rejoin at some common successor. This notion may be refined as a property of a particular object w, and the system called confluent if all its elements are confluent.

- locally confluent if for all w, x, and y in A, $x \leftarrow w \to y$ implies $x \downarrow y$. This property is sometimes called weak confluence.

Theorem. For an ARS the following conditions are equivalent: (i) it has the Church–Rosser property, (ii) it is confluent.[13]

Corollary.[14] In a confluent ARS if $x \overset{*}{\leftrightarrow} y$ then

- If both x and y are normal forms, then x = y.

- If y is a normal form, then $x \overset{*}{\to} y$.

Because of these equivalences, a fair bit of variation in definitions is encountered in the literature. For instance, in Bezem et al. 2003 the Church–Rosser property and confluence are defined to be synonymous and identical to the definition of confluence presented here; Church–Rosser as defined here remains unnamed, but is given as an equivalent property; this departure from other texts is deliberate.[15] Because of the above corollary, in a confluent ARS one may define a normal form y of x as an irreducible y with the property that $x \overset{*}{\leftrightarrow} y$. This definition, found in Book and Otto, is equivalent to the common one given here in a confluent system, but it is more inclusive[note 2] in a non-confluent ARS.

Local confluence, on the other hand, is not equivalent to the other notions of confluence given in this section; it is strictly weaker than confluence. The relation $a \to b$, $b \to a$, $a \to c$, $b \to d$ is locally confluent, but not confluent, as $c$ and $d$ are equivalent, but not joinable.[16]
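The gap between the two notions can be checked mechanically on a finite ARS. The sketch below is a brute-force check under the assumption that the relation is given as a finite set of pairs; it uses the four-rule relation a → b, b → a, a → c, b → d, a standard example of a locally confluent but non-confluent system, and the function names are illustrative.

```python
from itertools import product


def reachable(rel, x):
    """All y with x ->* y (x itself included)."""
    seen, stack = {x}, [x]
    while stack:
        u = stack.pop()
        for (s, t) in rel:
            if s == u and t not in seen:
                seen.add(t)
                stack.append(t)
    return seen


def joinable(rel, x, y):
    return bool(reachable(rel, x) & reachable(rel, y))


def is_confluent(objects, rel):
    """Every pair of objects reachable from a common w must be joinable."""
    for w in objects:
        r = reachable(rel, w)
        if any(not joinable(rel, x, y) for x, y in product(r, r)):
            return False
    return True


def is_locally_confluent(objects, rel):
    """Every pair of one-step successors of a common w must be joinable."""
    for w in objects:
        s = {y for (u, y) in rel if u == w}
        if any(not joinable(rel, x, y) for x, y in product(s, s)):
            return False
    return True


OBJECTS = {"a", "b", "c", "d"}
REL = {("a", "b"), ("b", "a"), ("a", "c"), ("b", "d")}

print(is_locally_confluent(OBJECTS, REL))   # True
print(is_confluent(OBJECTS, REL))           # False: c and d cannot be joined
```

The check fails for confluence because both c and d are reachable from a, yet each is irreducible, so they have no common successor.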

Termination and convergence

An abstract rewriting system is said to be terminating or noetherian if there is no infinite chain $x_0 \to x_1 \to x_2 \to \cdots$. In a terminating ARS, every object has at least one normal form, thus it is normalizing. The converse is not true. In example 1 for instance, there is an infinite rewriting chain, namely $a \to b \to a \to b \to \cdots$, even though the system is normalizing. A confluent and terminating ARS is called convergent. In a convergent ARS, every object has a unique normal form.

Theorem (Newman's Lemma): A terminating ARS is confluent if and only if it is locally confluent.
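For a finite ARS, termination is easy to test: an infinite chain must revisit some object, so the system terminates exactly when its reduction graph has no cycle. The following sketch assumes the relation only mentions elements of `objects`; `is_terminating` is an illustrative name.

```python
def is_terminating(objects, rel):
    """A finite ARS terminates iff its reduction graph is acyclic (depth-first search)."""
    succ = {x: [y for (u, y) in rel if u == x] for x in objects}
    WHITE, GREY, BLACK = 0, 1, 2            # unvisited, on the current path, finished
    colour = {x: WHITE for x in objects}

    def has_cycle(x):
        colour[x] = GREY
        for y in succ[x]:
            if colour[y] == GREY:           # back edge: a chain returns to its own path
                return True
            if colour[y] == WHITE and has_cycle(y):
                return True
        colour[x] = BLACK
        return False

    return not any(colour[x] == WHITE and has_cycle(x) for x in objects)


T = {"a", "b", "c"}
EXAMPLE_1 = {("a", "b"), ("b", "a"), ("a", "c"), ("b", "c")}
NO_LOOP = {("a", "c"), ("b", "c")}          # Example 1 without a -> b and b -> a

print(is_terminating(T, EXAMPLE_1))         # False: a -> b -> a -> ...
print(is_terminating(T, NO_LOOP))           # True
```

This argument does not carry over to infinite systems such as string or term rewriting systems, where an infinite chain need not repeat any object.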

String rewriting systems

A string rewriting system (SRS), also known as semi-Thue system, exploits the free monoid structure of the strings (words) over an alphabet to extend a rewriting relation $R$ to all strings in the alphabet that contain left- and respectively right-hand sides of some rules as substrings. Formally a semi-Thue system is a tuple $(\Sigma, R)$ where $\Sigma$ is a (usually finite) alphabet, and $R$ is a binary relation between some (fixed) strings in the alphabet, called rewrite rules. The one-step rewriting relation $\xrightarrow[R]{}$ induced by $R$ on $\Sigma^*$ is defined as: for any strings $s, t \in \Sigma^*$, $s \xrightarrow[R]{} t$ if and only if there exist $x, y, u, v \in \Sigma^*$ such that $s = xuy$, $t = xvy$, and $u \, R \, v$. Since $\xrightarrow[R]{}$ is a relation on $\Sigma^*$, the pair $(\Sigma^*, \xrightarrow[R]{})$ fits the definition of an abstract rewriting system. Obviously $R$ is a subset of $\xrightarrow[R]{}$. If the relation $R$ is symmetric, then the system is called a Thue system.
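The one-step relation translates almost literally into code: every occurrence of a left-hand side u inside s = xuy yields one successor xvy. In the sketch below, rules are pairs of Python strings, and the single rule ab → ba is a hypothetical example chosen only for illustration.

```python
def one_step(s, rules):
    """Return the set of all strings t such that s rewrites to t in one step."""
    results = set()
    for lhs, rhs in rules:
        start = s.find(lhs)
        while start != -1:                  # visit every occurrence of lhs in s
            # s = x + lhs + y  rewrites to  x + rhs + y
            results.add(s[:start] + rhs + s[start + len(lhs):])
            start = s.find(lhs, start + 1)
    return results


R = [("ab", "ba")]                          # hypothetical rule set

print(one_step("aabb", R))                  # one occurrence of "ab"
print(one_step("abab", R))                  # two occurrences, hence two successors
```

Returning a set rather than a single string reflects the non-determinism of rewriting: the system specifies which steps are possible, not which one to take.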

In a SRS, the reduction relation $\xrightarrow[R]{*}$ is compatible with the monoid operation, meaning that $x \xrightarrow[R]{*} y$ implies $uxv \xrightarrow[R]{*} uyv$ for all strings $x, y, u, v \in \Sigma^*$. Similarly, the reflexive transitive symmetric closure of $\xrightarrow[R]{}$, denoted $\overset{*}{\underset{R}{\leftrightarrow}}$, is a congruence, meaning it is an equivalence relation (by definition) and it is also compatible with string concatenation. The relation $\overset{*}{\underset{R}{\leftrightarrow}}$ is called the Thue congruence generated by $R$. In a Thue system, i.e. if $R$ is symmetric, the rewrite relation $\xrightarrow[R]{*}$ coincides with the Thue congruence $\overset{*}{\underset{R}{\leftrightarrow}}$.

The notion of a semi-Thue system essentially coincides with the presentation of a monoid. Since $\overset{*}{\underset{R}{\leftrightarrow}}$ is a congruence, we can define the factor monoid $\mathcal{M}_R = \Sigma^*/\overset{*}{\underset{R}{\leftrightarrow}}$ of the free monoid $\Sigma^*$ by the Thue congruence in the usual manner. If a monoid $\mathcal{M}$ is isomorphic with $\mathcal{M}_R$, then the semi-Thue system $(\Sigma, R)$ is called a monoid presentation of $\mathcal{M}$.

We immediately get some very useful connections with other areas of algebra. For example, the alphabet {a, b} with the rules { ab → ε, ba → ε }, where ε is the empty string, is a presentation of the free group on one generator. If instead the rules are just { ab → ε }, then we obtain a presentation of the bicyclic monoid. Thus semi-Thue systems constitute a natural framework for solving the word problem for monoids and groups. In fact, every monoid has a presentation of the form $(\Sigma, R)$, i.e. it may always be presented by a semi-Thue system, possibly over an infinite alphabet.
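Both presentations can be explored with a small normalizer. The sketch below repeatedly applies the first applicable rule at its leftmost occurrence; this terminates here because each rule shortens the string, an assumption that does not hold for arbitrary rule sets. The name `normalize` and the sample words are illustrative.

```python
def normalize(s, rules):
    """Apply rules until none matches; assumes every rule shortens the string."""
    changed = True
    while changed:
        changed = False
        for lhs, rhs in rules:
            i = s.find(lhs)
            if i != -1:
                s = s[:i] + rhs + s[i + len(lhs):]
                changed = True
                break                       # restart from the first rule
    return s


GROUP = [("ab", ""), ("ba", "")]            # free group on one generator
BICYCLIC = [("ab", "")]                     # bicyclic monoid

print(repr(normalize("abba", GROUP)))       # '' (the identity element)
print(repr(normalize("aabab", GROUP)))      # 'a'
print(repr(normalize("abba", BICYCLIC)))    # 'ba' (irreducible without ba -> ε)
```

With both rules, every word reduces to a power of a or a power of b, so b behaves as the inverse of a and two words are equivalent exactly when their normal forms coincide. With only ab → ε, the irreducible words are those consisting of some b's followed by some a's.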

The word problem for a semi-Thue system is undecidable in general; this result is sometimes known as the Post-Markov theorem.[17]

Term rewriting systems

A term rewriting system (TRS) is a rewriting system whose objects are terms, which are expressions with nested sub-expressions. For example, the system shown under § Logic above is a term rewriting system. The terms in this system are composed of the binary operators ($\lor$) and ($\land$) and the unary operator ($\neg$). Also present in the rules are variables, which each represent any possible term (though a single variable always represents the same term throughout a single rule).

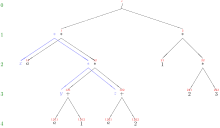

In contrast to string rewriting systems, whose objects are sequences of symbols, the objects of a term rewriting system form a term algebra. A term can be visualized as a tree of symbols, the set of admitted symbols being fixed by a given signature.

Formal definition

A term rewriting rule is a pair of terms, commonly written as $l \to r$, to indicate that the left-hand side $l$ can be replaced by the right-hand side $r$. A term rewriting system is a set $R$ of such rules. A rule $l \to r$ can be applied to a term $s$ if the left term $l$ matches some subterm of $s$, that is, if $s|_p = l\sigma$ [note 3] for some position $p$ in $s$ and some substitution $\sigma$. The result term $t$ of this rule application is then obtained as $t = s[r\sigma]_p$;[note 4] see picture 1. In this case, $s$ is said to be rewritten in one step, or rewritten directly, to $t$ by the system $R$, formally denoted as $s \to_R t$, $s \xrightarrow[R]{} t$, or as $s \xrightarrow{R} t$ by some authors. If a term $t_1$ can be rewritten in several steps into a term $t_n$, that is, if $t_1 \xrightarrow[R]{} t_2 \xrightarrow[R]{} \ldots \xrightarrow[R]{} t_n$, the term $t_1$ is said to be rewritten to $t_n$, formally denoted as $t_1 \xrightarrow[R]{+} t_n$. In other words, the relation $\xrightarrow[R]{+}$ is the transitive closure of the relation $\xrightarrow[R]{}$; often, also the notation $\xrightarrow[R]{*}$ is used to denote the reflexive-transitive closure of $\xrightarrow[R]{}$, that is, $s \xrightarrow[R]{*} t$ if $s = t$ or $s \xrightarrow[R]{+} t$.[18] A term rewriting given by a set $R$ of rules can be viewed as an abstract rewriting system as defined above, with terms as its objects and $\xrightarrow[R]{}$ as its rewrite relation.

For example, $x * (y * z) \to (x * y) * z$ is a rewrite rule, commonly used to establish a normal form with respect to the associativity of $*$. That rule can be applied at the numerator in the term $\frac{a * ((a+1) * (a+2))}{1 * (2 * 3)}$ with the matching substitution $\{x \mapsto a,\ y \mapsto a+1,\ z \mapsto a+2\}$, see picture 2.[note 5] Applying that substitution to the rule's right-hand side yields the term $(a * (a+1)) * (a+2)$, and replacing the numerator by that term yields $\frac{(a * (a+1)) * (a+2)}{1 * (2 * 3)}$, which is the result term of applying the rewrite rule. Altogether, applying the rewrite rule has achieved what is called "applying the associativity law for $*$ to $\frac{a * ((a+1) * (a+2))}{1 * (2 * 3)}$" in elementary algebra. Alternatively, the rule could have been applied to the denominator of the original term, yielding $\frac{a * ((a+1) * (a+2))}{(1 * 2) * 3}$.
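The ingredients of a rule application (matching, substitution, and replacement at a position) can be sketched as follows. The encoding is an assumption of this sketch: terms are nested tuples whose first entry is the function symbol, the names in `VARS` are treated as rule variables, and a position is a tuple of argument indices. The example applies an associativity rule x * (y * z) → (x * y) * z to a fraction with numerator a * ((a+1) * (a+2)) and denominator 1 * (2 * 3).

```python
VARS = {"x", "y", "z"}                      # names treated as rule variables


def match(pattern, term, subst=None):
    """Return a substitution sigma with pattern·sigma == term, or None."""
    subst = dict(subst or {})
    if isinstance(pattern, str) and pattern in VARS:
        if pattern in subst and subst[pattern] != term:
            return None                     # same variable bound to two different terms
        subst[pattern] = term
        return subst
    if isinstance(pattern, tuple):
        if (not isinstance(term, tuple) or len(term) != len(pattern)
                or term[0] != pattern[0]):
            return None
        for p, t in zip(pattern[1:], term[1:]):
            subst = match(p, t, subst)
            if subst is None:
                return None
        return subst
    return subst if pattern == term else None   # constants must agree exactly


def apply_subst(term, subst):
    """Replace every variable in term by its image under subst."""
    if isinstance(term, str) and term in VARS:
        return subst[term]
    if isinstance(term, tuple):
        return (term[0],) + tuple(apply_subst(t, subst) for t in term[1:])
    return term


def subterm(term, pos):
    """The subterm of term rooted at position pos."""
    for i in pos:
        term = term[i]
    return term


def replace(term, pos, new):
    """The result of replacing the subterm at position pos in term by new."""
    if not pos:
        return new
    i = pos[0]
    return term[:i] + (replace(term[i], pos[1:], new),) + term[i + 1:]


def rewrite_at(term, pos, rule):
    """Apply rule (lhs, rhs) at position pos, or return None if lhs does not match."""
    lhs, rhs = rule
    sigma = match(lhs, subterm(term, pos))
    if sigma is None:
        return None
    return replace(term, pos, apply_subst(rhs, sigma))


ASSOC = (("*", "x", ("*", "y", "z")), ("*", ("*", "x", "y"), "z"))
s = ("/", ("*", "a", ("*", ("+", "a", 1), ("+", "a", 2))), ("*", 1, ("*", 2, 3)))

numerator_result = rewrite_at(s, (1,), ASSOC)     # position (1,) is the numerator
denominator_result = rewrite_at(s, (2,), ASSOC)   # position (2,) is the denominator
print(numerator_result)
print(denominator_result)
```

The two calls show that one rule may be applicable at several positions of the same term, each giving a different one-step successor.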

Termination

Beyond the general notions discussed in the section Termination and convergence above, term rewriting systems raise additional subtleties.

Termination even of a system consisting of one rule with a linear left-hand side is undecidable.[19] Termination is also undecidable for systems using only unary function symbols; however, it is decidable for finite ground systems.[20]

The following term rewrite system is normalizing,[note 6] but not terminating,[note 7] and not confluent:[21]

- $f(x,x) \to g(x)$

- $f(x,g(x)) \to b$

- $h(c,x) \to f(h(x,c),h(x,x))$

The following two examples of terminating term rewrite systems are due to Toyama:[22]

- $f(0,1,x) \to f(x,x,x)$

and

- $g(x,y) \to x$

- $g(x,y) \to y$

Their union is a non-terminating system, since $f(g(0,1),g(0,1),g(0,1)) \to f(0,g(0,1),g(0,1)) \to f(0,1,g(0,1)) \to f(g(0,1),g(0,1),g(0,1)) \to \cdots$. This result disproves a conjecture of Dershowitz,[23] who claimed that the union of two terminating term rewrite systems $R_1$ and $R_2$ is again terminating if all left-hand sides of $R_1$ and right-hand sides of $R_2$ are linear, and there are no "overlaps" between left-hand sides of $R_1$ and right-hand sides of $R_2$. All these properties are satisfied by Toyama's examples.
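The cycle in the union can be reproduced mechanically. The sketch below assumes Toyama's systems in their usual form, f(0,1,x) → f(x,x,x) together with g(x,y) → x and g(x,y) → y, encodes terms as nested tuples with the constants written as the strings "0" and "1", and uses illustrative helper names.

```python
VARS = {"x", "y"}                           # names treated as rule variables


def match(pattern, term, sigma):
    """Extend sigma so that pattern·sigma == term, or return None."""
    if isinstance(pattern, str) and pattern in VARS:
        if pattern in sigma:
            return sigma if sigma[pattern] == term else None
        return {**sigma, pattern: term}
    if isinstance(pattern, tuple):
        if not (isinstance(term, tuple) and len(term) == len(pattern)
                and term[0] == pattern[0]):
            return None
        for p, t in zip(pattern[1:], term[1:]):
            sigma = match(p, t, sigma)
            if sigma is None:
                return None
        return sigma
    return sigma if pattern == term else None


def substitute(term, sigma):
    if isinstance(term, str) and term in VARS:
        return sigma[term]
    if isinstance(term, tuple):
        return (term[0],) + tuple(substitute(t, sigma) for t in term[1:])
    return term


def successors(term, rules):
    """All terms obtained from term by exactly one rewrite step, at any position."""
    out = set()
    for lhs, rhs in rules:                  # rewrite at the root
        sigma = match(lhs, term, {})
        if sigma is not None:
            out.add(substitute(rhs, sigma))
    if isinstance(term, tuple):             # rewrite inside an argument
        for i in range(1, len(term)):
            for new in successors(term[i], rules):
                out.add(term[:i] + (new,) + term[i + 1:])
    return out


R1 = [(("f", "0", "1", "x"), ("f", "x", "x", "x"))]
R2 = [(("g", "x", "y"), "x"), (("g", "x", "y"), "y")]
UNION = R1 + R2

g01 = ("g", "0", "1")
start = ("f", g01, g01, g01)
step1 = ("f", "0", g01, g01)
step2 = ("f", "0", "1", g01)

# Each term of the cycle is a one-step successor of the previous one.
print(step1 in successors(start, UNION))
print(step2 in successors(step1, UNION))
print(start in successors(step2, UNION))
```

All three checks succeed, so the union admits an infinite derivation that returns to its starting term every three steps. With R1 alone, the starting term has no successor at all, since its first two arguments are not the constants 0 and 1.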

See Rewrite order and Path ordering (term rewriting) for ordering relations used in termination proofs for term rewriting systems.

Graph rewriting systems

Graph rewrite systems generalize term rewrite systems: they operate on graphs instead of (ground) terms or their corresponding tree representations.

Trace rewriting systems

Trace theory provides a means for discussing multiprocessing in more formal terms, such as via the trace monoid and the history monoid. Rewriting can be performed in trace systems as well.

Philosophy

Rewriting systems can be seen as programs that infer end-effects from a list of cause-effect relationships. In this way, rewriting systems can be considered to be automated causality provers.[citation needed]

See also

- Critical pair (logic)

- Knuth–Bendix completion algorithm

- L-systems specify rewriting that is done in parallel.

- Referential transparency in computer science

- Regulated rewriting

- Rho calculus

Notes

- ^ This variant of the previous rule is needed since the commutative law A∨B = B∨A cannot be turned into a rewrite rule. A rule like A∨B → B∨A would cause the rewrite system to be nonterminating.

- ^ i.e. it considers more objects as a normal form of x than our definition

- ^ here, $s|_p$ denotes the subterm of $s$ rooted at position $p$, while $l\sigma$ denotes the result of applying the substitution $\sigma$ to the term $l$

- ^ here, $s[r\sigma]_p$ denotes the result of replacing the subterm at position $p$ in $s$ by the term $r\sigma$

- ^ since applying that substitution to the rule's left hand side yields the numerator

- ^ i.e. for each term, some normal form exists, e.g. h(c,c) has the normal forms b and g(b), since h(c,c) → f(h(c,c),h(c,c)) → f(h(c,c),f(h(c,c),h(c,c))) → f(h(c,c),g(h(c,c))) → b, and h(c,c) → f(h(c,c),h(c,c)) → g(h(c,c)) → ... → g(b); neither b nor g(b) can be rewritten any further, therefore the system is not confluent

- ^ i.e., there are infinite derivations, e.g. h(c,c) → f(h(c,c),h(c,c)) → f(f(h(c,c),h(c,c)) ,h(c,c)) → f(f(f(h(c,c),h(c,c)),h(c,c)) ,h(c,c)) → ...

References

- ^ Sculthorpe, Neil; Frisby, Nicolas; Gill, Andy (2014). "The Kansas University rewrite engine". Journal of Functional Programming. 24 (4): 434–473. doi:10.1017/S0956796814000185. ISSN 0956-7968.

- ^ Hsiang, Jieh, et al. "The term rewriting approach to automated theorem proving." The Journal of Logic Programming 14.1-2 (1992): 71–99.

- ^ Frühwirth, Thom. "Theory and practice of constraint handling rules." The Journal of Logic Programming 37.1 (1998): 95–138.

- ^ Clavel, Manuel, et al. "Maude: Specification and programming in rewriting logic." Theoretical Computer Science 285.2 (2002): 187–243.

- ^ Kim Marriott; Peter J. Stuckey (1998). Programming with Constraints: An Introduction. MIT Press. pp. 436–. ISBN 978-0-262-13341-8.

- ^ Robert Freidin (1992). Foundations of Generative Syntax. MIT Press. ISBN 978-0-262-06144-5.

- ^ Bezem et al., p. 7

- ^ a b Book and Otto, p. 10

- ^ Bezem et al., p. 7

- ^ Baader and Nipkow, pp. 8–9

- ^ Alonzo Church and J. Barkley Rosser. Some properties of conversion. Trans. AMS, 39:472–482, 1936

- ^ Baader and Nipkow, p. 9

- ^ Baader and Nipkow, p. 11

- ^ Baader and Nipkow, p. 12

- ^ Bezem et al., p. 11

- ^ M.H.A. Newman (1942). "On Theories with a Combinatorial Definition of Equivalence". Annals of Mathematics. 43 (2): 223–243. doi:10.2307/1968867.

- ^ Martin Davis et al. 1994, p. 178

- ^ N. Dershowitz, J.-P. Jouannaud (1990). Jan van Leeuwen, ed. Rewrite Systems. Handbook of Theoretical Computer Science. B. Elsevier. pp. 243–320.; here: Sect. 2.3

- ^ M. Dauchet (1989). "Simulation of Turing Machines by a Left-Linear Rewrite Rule". Proc. 3rd RTA. LNCS. 355. Springer LNCS. pp. 109–120.

- ^ Gerard Huet, D.S. Lankford (Mar 1978). On the Uniform Halting Problem for Term Rewriting Systems (PDF) (Technical report). IRIA. p. 8. 283. Retrieved 16 June 2013.

- ^ Bernhard Gramlich (Jun 1993). "Relating Innermost, Weak, Uniform, and Modular Termination of Term Rewriting Systems". In Voronkov, Andrei. Proc. International Conference on Logic Programming and Automated Reasoning (LPAR). LNAI. 624. Springer. pp. 285–296. Here: Example 3.3

- ^ Y. Toyama (1987). "Counterexamples to Termination for the Direct Sum of Term Rewriting Systems" (PDF). Inf. Process. Lett. 25 (3): 141–143. doi:10.1016/0020-0190(87)90122-0.

- ^ N. Dershowitz (1985). "Termination". In Jean-Pierre Jouannaud. Proc. RTA (PDF). LNCS. 220. Springer. pp. 180–224.; here: p.210

Further reading

- Baader, Franz; Nipkow, Tobias (1999). Term rewriting and all that. Cambridge University Press. ISBN 0-521-77920-0. 316 pages. A textbook suitable for undergraduates.

- Marc Bezem, Jan Willem Klop, Roel de Vrijer ("Terese"), Term Rewriting Systems ("TeReSe"), Cambridge University Press, 2003, ISBN 0-521-39115-6. This is the most recent comprehensive monograph. It uses, however, a fair number of not-yet-standard notations and definitions. For instance, the Church–Rosser property is defined to be identical with confluence.

- Nachum Dershowitz and Jean-Pierre Jouannaud "Rewrite Systems", Chapter 6 in Jan van Leeuwen (Ed.), Handbook of Theoretical Computer Science, Volume B: Formal Models and Semantics., Elsevier and MIT Press, 1990, ISBN 0-444-88074-7, pp. 243–320. The preprint of this chapter is freely available from the authors, but it is missing the figures.

- Nachum Dershowitz and David Plaisted. "Rewriting", Chapter 9 in John Alan Robinson and Andrei Voronkov (Eds.), Handbook of Automated Reasoning, Volume 1.

- Gérard Huet and Derek Oppen, Equations and Rewrite Rules, A Survey (1980) Stanford Verification Group, Report N° 15 Computer Science Department Report N° STAN-CS-80-785

- Jan Willem Klop. "Term Rewriting Systems", Chapter 1 in Samson Abramsky, Dov M. Gabbay and Tom Maibaum (Eds.), Handbook of Logic in Computer Science, Volume 2: Background: Computational Structures.

- David Plaisted. "Equational reasoning and term rewriting systems", in Dov M. Gabbay, C. J. Hogger and John Alan Robinson (Eds.), Handbook of Logic in Artificial Intelligence and Logic Programming, Volume 1.

- Jürgen Avenhaus and Klaus Madlener. "Term rewriting and equational reasoning". In Ranan B. Banerji (Ed.), Formal Techniques in Artificial Intelligence: A Sourcebook, Elsevier (1990).

- String rewriting

- Ronald V. Book and Friedrich Otto, String-Rewriting Systems, Springer (1993).

- Benjamin Benninghofen, Susanne Kemmerich and Michael M. Richter, Systems of Reductions. LNCS 277, Springer-Verlag (1987).

- Other

- Martin Davis, Ron Sigal, Elaine J. Weyuker, (1994) Computability, Complexity, and Languages: Fundamentals of Theoretical Computer Science – 2nd edition, Academic Press, ISBN 0-12-206382-1.
